RoboKeeper

Created a robotic goalkeeper using computer vision and robotic manipulation.

Overview

In this group project, my team and I set out to create a robot goalkeeper! The robot we used was the Adroit Manipulator Arm, manufactured by HDT Global (https://tinyurl.com/ynb82wym). The project involved using computer vision to identify the location of a moving target with a top-down camera, converting those coordinates into the frame of the robotic arm, and commanding the arm to intercept the target before it crossed a defined goal line.

System Breakdown

To accomplish this task, we broke it down into three components:

1. Perception - Determine the location of the ball relative to the camera

2. Transforms - Determine the location of the ball relative to the robot

3. Motion Control - Control the robot to move to the desired location

Perception

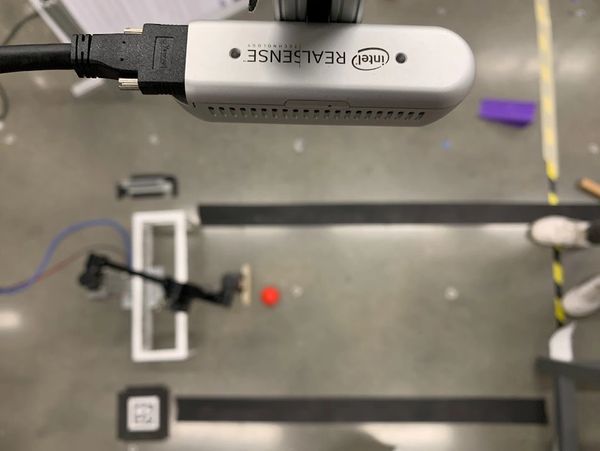

As any successful goalkeeper will tell you, you must keep your eye on the ball! In our case, our "eyes" were an Intel RealSense depth camera mounted directly above the playing area and pointed straight down, and the ball was a red foam ball.

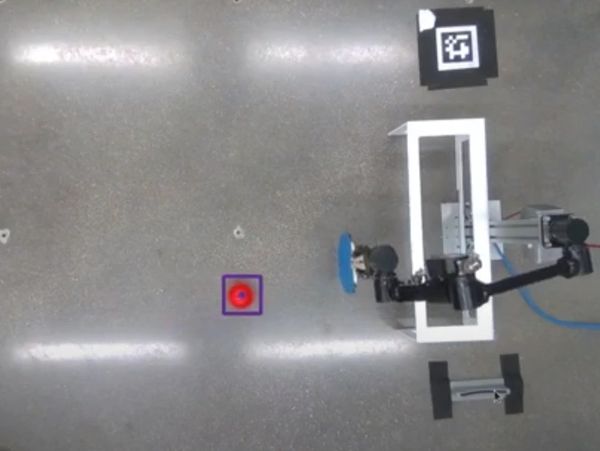

We used camera data from the RealSense in conjunction with OpenCV and the ROS CvBridge to identify and locate the ball in the frame. First, the image was thresholded to keep only red objects whose HSV values matched our ball. Once this mask image was formed, contours were found around all the red objects in view (ideally just the ball), and any contour deemed too small was discarded. With the ball's contour the only thing left in the image, its centroid (i.e. the ball's location in the camera frame) could easily be determined.
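The thresholding, blob-filtering, and centroid steps above can be sketched without any dependencies, as below. This is an illustration under stated assumptions, not the team's code: it takes an RGB image with 0-255 channels, the HSV bounds and minimum blob area are made-up placeholders (the real values were tuned to our ball and lighting and aren't recorded here), and a simple flood-fill connected-component pass stands in for OpenCV's contour finding, grouping red pixels into blobs in much the same way. With OpenCV, the equivalent steps would use `cv2.cvtColor` with `cv2.COLOR_BGR2HSV`, two `cv2.inRange` masks joined by `cv2.bitwise_or` (OpenCV stores hue as 0 to 179 and red wraps around 0), then `cv2.findContours`, `cv2.contourArea`, and `cv2.moments`.

```python
import colorsys
from collections import deque

# Placeholder HSV bounds for a red ball, with hue on a 0-360 scale.
# Red straddles hue 0, so two hue bands are accepted.
RED_HUE_BANDS = [(0.0, 15.0), (345.0, 360.0)]
MIN_SAT = 0.5
MIN_VAL = 0.3
MIN_AREA = 4  # placeholder: blobs with fewer pixels are discarded as noise


def is_red(rgb):
    """Return True if an (r, g, b) pixel with 0-255 channels falls in the red HSV bands."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    hue_deg = h * 360.0
    in_band = any(lo <= hue_deg <= hi for lo, hi in RED_HUE_BANDS)
    return in_band and s >= MIN_SAT and v >= MIN_VAL


def threshold(image):
    """Build a binary mask (nested lists of bools) from an RGB image."""
    return [[is_red(px) for px in row] for row in image]


def blobs(mask):
    """Group mask pixels into 4-connected components; yield each as a list of (row, col)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    for r0 in range(rows):
        for c0 in range(cols):
            if not mask[r0][c0] or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            queue, pixels = deque([(r0, c0)]), []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            yield pixels


def ball_centroid(image):
    """Return the (u, v) pixel centroid of the largest red blob, or None if none survives."""
    mask = threshold(image)
    candidates = [p for p in blobs(mask) if len(p) >= MIN_AREA]
    if not candidates:
        return None
    ball = max(candidates, key=len)
    # Centroid = first-order moments divided by the area (zeroth-order moment).
    u = sum(c for _, c in ball) / len(ball)  # column, i.e. image x
    v = sum(r for r, _ in ball) / len(ball)  # row, i.e. image y
    return (u, v)
```

For example, a 3x3 red square at rows 2 to 4 and columns 5 to 7 of a grey 10x10 image yields a centroid of `(6.0, 3.0)`, while a lone red pixel elsewhere is dropped by the area filter.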

This brief video demonstrates the use of OpenCV to locate the ball and determine the location of its centroid. Once the centroid is found, the ball is marked at that point and a bounding box is drawn around it.
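The centroid from the previous step is a pixel position, and this write-up doesn't say exactly how it was turned into a metric position relative to the camera. One common approach, assumed here, is to read the depth at the centroid pixel from the RealSense depth stream and back-project through a pinhole camera model. librealsense provides `rs2_deproject_pixel_to_point` for this, which can also account for lens distortion; the sketch below ignores distortion, and the `Intrinsics` values used with it are placeholders rather than our camera's calibration.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical container; values come from calibration)."""
    fx: float  # focal length along image x (columns)
    fy: float  # focal length along image y (rows)
    cx: float  # principal point, column
    cy: float  # principal point, row


def deproject(u, v, depth_m, k):
    """Map pixel (u, v) and depth along the optical axis to a camera-frame point in metres.

    Assumes an undistorted pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    """
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)
```

With `fx = fy = 600`, `cx = 320`, `cy = 240` and a depth of 1.5 m, a centroid at pixel (380, 240) maps to roughly (0.15, 0.0, 1.5) m in the camera frame.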

Transforms

Knowing the location of the ball within the frame of the camera is great, but on its own it didn't help our robot much. To convert the ball's coordinates in the camera frame into corresponding coordinates in the robot frame, some coordinate transformations were necessary.
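In standard homogeneous-transform notation (the symbols here are ours, not part of the original project), such a transformation takes a point ${}^{C}\mathbf{p}$ expressed in the camera frame $C$ to the same point ${}^{B}\mathbf{p}$ expressed in the robot base frame $B$. Here ${}^{B}R_{C}$ is the camera's orientation and ${}^{B}\mathbf{t}_{C}$ its position, both expressed in $B$:

$$
\begin{bmatrix} {}^{B}\mathbf{p} \\ 1 \end{bmatrix}
= {}^{B}T_{C} \begin{bmatrix} {}^{C}\mathbf{p} \\ 1 \end{bmatrix},
\qquad
{}^{B}T_{C} = \begin{bmatrix} {}^{B}R_{C} & {}^{B}\mathbf{t}_{C} \\ \mathbf{0}^{\top} & 1 \end{bmatrix},
$$

which expands to ${}^{B}\mathbf{p} = {}^{B}R_{C}\,{}^{C}\mathbf{p} + {}^{B}\mathbf{t}_{C}$. The difficulty is that ${}^{B}T_{C}$ is not known directly.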

In our particular setup, we knew where the ball was relative to the camera (calculated by the perception algorithm), but we needed something additional to link the camera's location to the robot's location. That link was provided by an AprilTag (seen just below the goal in the images above). The camera could detect the AprilTag, and the AprilTag detection software reported the tag's location relative to the camera. Then, by hand-measuring the location of the AprilTag relative to the base of the robot, we had the complete set of frame relationships necessary to calculate the location of the ball relative to the robot.
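With the AprilTag frame $A$ in the chain, the unknown camera-to-base transform can be composed from the two known ones: ${}^{B}T_{C} = {}^{B}T_{A}\,({}^{C}T_{A})^{-1}$, where ${}^{C}T_{A}$ is the tag pose reported by the detector and ${}^{B}T_{A}$ is the hand-measured tag pose relative to the robot base. Below is a minimal plain-Python sketch of that composition using 4x4 nested lists; all helper names are hypothetical. To keep it short, `make_transform` only builds rotations about z, whereas a real tag pose generally needs a full 3D rotation; `invert_rigid`, `matmul`, and `apply` work unchanged for any rotation. In a ROS system, the tf2 library can store and compose such transforms instead, though this write-up doesn't say whether we used it.

```python
import math


def make_transform(yaw_rad, tx, ty, tz):
    """Build a 4x4 homogeneous transform: rotation about z by yaw_rad plus a translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform as [R^T, -R^T t], avoiding a general matrix inverse."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]  # transpose of the rotation block
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def apply(t, point):
    """Transform a 3D point (x, y, z) by a 4x4 homogeneous transform."""
    x, y, z = point
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


def ball_in_base(ball_in_camera, tag_in_camera, tag_in_base):
    """Express a camera-frame ball position in the robot base frame.

    tag_in_camera is T_C_A (from the tag detector); tag_in_base is T_B_A (hand-measured).
    T_B_C = T_B_A * inverse(T_C_A), then p_B = T_B_C * p_C.
    """
    base_from_camera = matmul(tag_in_base, invert_rigid(tag_in_camera))
    return apply(base_from_camera, ball_in_camera)
```

For instance, if the tag sits 1 m below the camera, rotated 90 degrees about z, and 0.5 m in front of the robot base, a ball seen at (0.0, 0.1, 1.0) m in the camera frame comes out at approximately (0.6, 0.0, 0.0) m in the base frame.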

Motion Control

Finally, with the location of the ball known relative to the robot's frame, the robot can be controlled to accomplish our goal. The end-effector of the Adroit arm is programmed to mirror the y-coordinate of the ball as the ball moves toward the goal, while maintaining a fixed distance between itself and the goal. The Adroit's joint angles corresponding to various y-coordinates along the front of the goal were recorded in a lookup table at a resolution of 1 cm. At each update, the ball's current y-coordinate is matched to the nearest y-coordinate in the lookup table, and the corresponding joint angles are published directly to the Adroit.
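The lookup-table scheme can be sketched as follows. This is an illustration, not our controller: the goal extent, the `angles_for_cm` function that stands in for the hand-recorded joint angles, and the `publish` callback (in place of the actual ROS publisher, whose topic and message type aren't given here) are all hypothetical. Keying the table by whole centimetres turns the "nearest entry" search into a single rounding step, and clamping keeps the keeper at the nearest post when the ball is wide of the goal.

```python
import math

RESOLUTION_M = 0.01  # 1 cm spacing between recorded poses


def build_table(y_min_cm, y_max_cm, angles_for_cm):
    """Build {y_cm: joint_angles}; angles_for_cm is a hypothetical stand-in for recorded poses."""
    return {y: angles_for_cm(y) for y in range(y_min_cm, y_max_cm + 1)}


def nearest_pose(table, ball_y_m):
    """Return the joint angles stored at the entry nearest the ball's y-coordinate.

    Positions beyond either end of the table clamp to the outermost entry.
    """
    y_cm = math.floor(ball_y_m / RESOLUTION_M + 0.5)  # round half up to the nearest cm
    y_cm = max(min(table), min(y_cm, max(table)))
    return table[y_cm]


def keeper_step(table, ball_y_m, publish):
    """One control tick: look up the pose for the ball's y and pass it to publish (hypothetical)."""
    pose = nearest_pose(table, ball_y_m)
    publish(pose)
    return pose
```

With a table spanning -30 cm to 30 cm, a ball at y = 0.123 m selects the 12 cm entry, and a ball at y = 1.0 m clamps to the 30 cm entry.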

The final result is a fun, interactive goalkeeping robot that is surprisingly challenging to defeat!

The RoboKeeper Team!