Automated Biomimetic Fingertip Deformation Testing System

Created automated system for gathering biomimetic fingertip motion data and the associated deformation characteristics.

Overview

In this solo project, I worked with Northwestern University professors Dr. Matthew Elwin, Dr. Ed Colgate, and Dr. Kevin Lynch to further their goal of investigating the properties of ideal biomimetic fingers and their ability to interact with their environment.

This project used a Franka Emika Panda robotic manipulator arm to move a clear sliding platform as it interacted with a prototype silicone biomimetic fingertip. A high-resolution camera was fixed below the platform, looking upward through it at the tip of the finger and an embedded fiducial pattern. The motion profiles of the robot and the detected changes in the fiducial pattern provide useful information about the prototype finger and its material behavior when interacting with a surface.

Hardware

Franka Emika Manipulator Arm

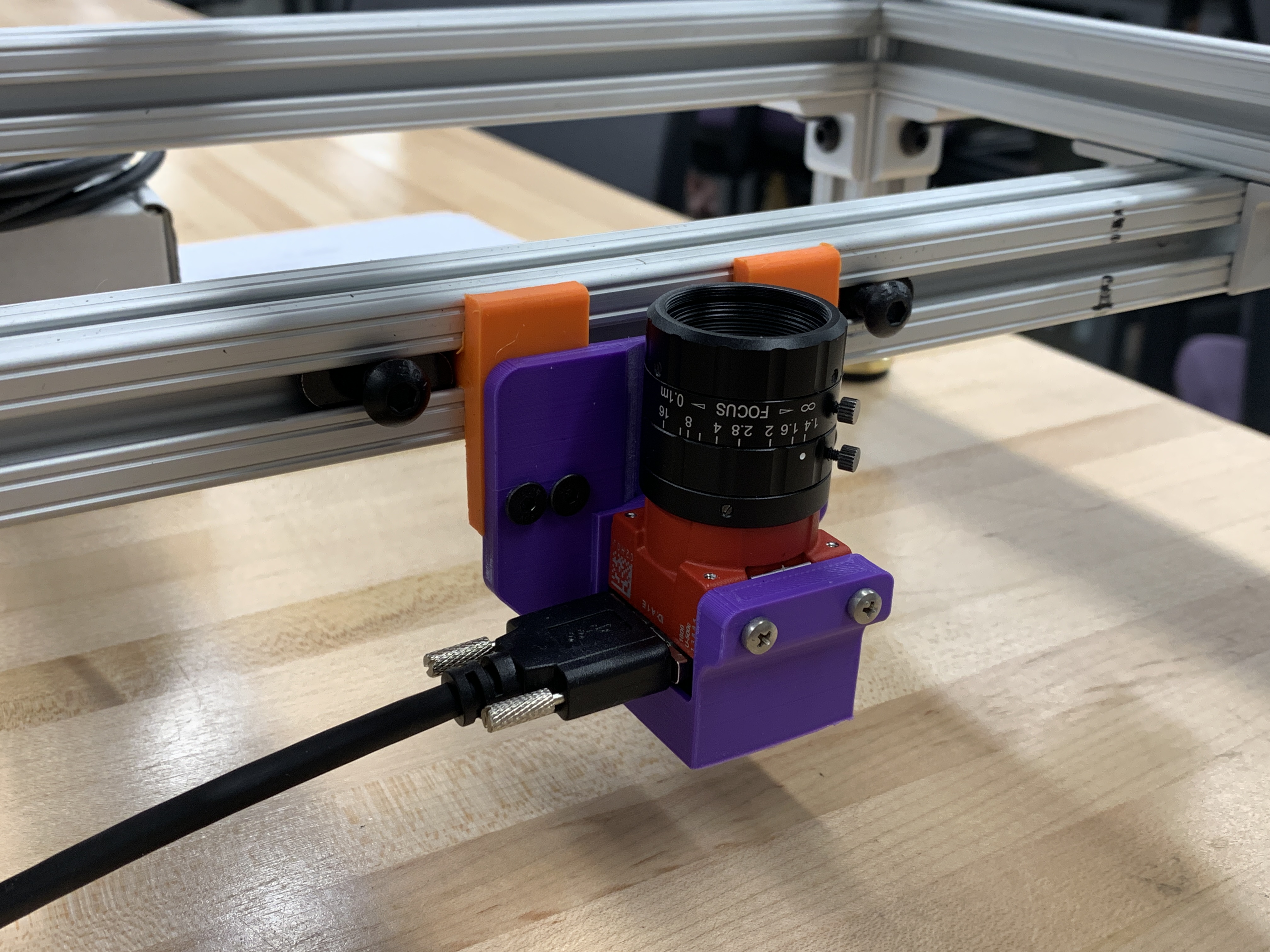

Allied Vision Camera

Fingertip Prototypes

Several iterations of fingertip prototypes were used over the course of this project as the needs of the system became more apparent over time. Effort was focused primarily on functionality within the needs of the testing system as opposed to creating fingertips that more closely mimicked human fingertips. The primary purpose of this project was to create a system that could ultimately be used to compare the properties of various prototypes in efforts to create better biomimetic materials, not necessarily to create those biomimetic materials themselves. A key component of the fingertips that quickly became apparent was the need for some sort of fiducial system on the fingertip to allow for tracking of the fingertip surface as it slipped and deformed during manipulation.

Testing Fixture

The physical testing fixture used in this project consisted of the fixed biomimetic fingertip prototype above a high resolution machine vision camera, with a clear acrylic sliding platform positioned between them. The reasoning for fixing the fingertip in place and actuating the platform rather than the reverse was to keep the fingertip firmly in frame of the fixed camera below the platform. Due to the minute detail needed to capture the deformation and slip characteristics of the fingertip, it made more sense to ensure the fingertip remained squarely in the camera's view.

Software

- General
  - Ubuntu 22.04 Jammy Jellyfish
  - ROS2 Humble Hawksbill
- Franka Manipulator Arm
  - MoveIt2
  - franka_ros2 (ROS2 driver for Franka)
- Allied Vision Camera
  - OpenCV 4.6.0
  - cv_bridge
  - Vimba SDK 6.0.0 (interface for camera)
  - avt_vimba_camera (ROS2 wrapper for Vimba SDK)

Package Breakdown

This project was created in the form of a ROS2 package written primarily in C++. For organization, the various components of the project were broken up into four individual ROS2 packages, each with a specialized function:

- finger_rig_description: Contains the Rviz configuration file for visualizing both the Franka arm and the computer vision aspects of the project.

- finger_rig_bringup: Primarily contains the launch files needed to run all of the nodes, controllers, and configurations.

- finger_rig_msgs: Contains a few custom service types used for supplying commands to the robot.

- finger_rig_control: Contains the bulk of the project in the form of multiple nodes for perception, motion control, and environmental configuration.

Motion Control

The motion of the Franka robot was programmed and controlled using the MoveIt2 motion planning framework. The package is centered around a number of ROS2 services that allow the user to interact with and control the robot for the various tasks relevant to the project. Some of these services offered basic functionality for controlling the robot such as sending the arm to its home pose and opening and closing the gripper.

The remaining ROS2 services in the package provide the motion profiles that the biomimetic finger is actuated against. Each of these services first grips the sliding platform that the biomimetic fingertip rests against, then moves the platform through the specified motion profile, simulating the fingertip sliding across a stationary surface.

Computer Vision

The goal of the computer vision in this project was to develop a way of numerically evaluating the slip and deformation of a biomimetic fingertip as it slides along an object (the sliding platform in this case). This was done by tracking the positions of the randomized fiducial pattern on the tip of the finger during the execution of the robot-actuated motion profiles.

The fiducial tracking was done using an algorithm made up of several common features from the OpenCV library. The color image published by the camera via the avt_vimba_camera ROS2 driver was first converted to grayscale, then thresholded by pixel intensity to highlight only the darker fiducial pattern on the lighter fingertip. This mask image was then cropped to include only the center circle of the image, as this was the location of the fingertip within the frame of the camera.
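The thresholding and circular crop steps can be sketched in plain C++ to make the logic explicit. This is a minimal illustration under stated assumptions, not the project's code: the `GrayImage` struct, the function name, and the `dark_threshold` and `radius_px` parameters are all hypothetical, and the input is assumed to be already converted to grayscale. In OpenCV the same steps would typically be done with calls such as `cv::cvtColor`, `cv::threshold`, and a circular mask.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical row-major 8-bit grayscale image (a stand-in for cv::Mat).
struct GrayImage {
  int width;
  int height;
  std::vector<std::uint8_t> pixels;  // size must equal width * height

  std::uint8_t at(int x, int y) const {
    return pixels[static_cast<std::size_t>(y) * width + x];
  }
};

// Builds a binary mask: 255 marks a candidate fiducial pixel, 0 is background.
// A pixel is kept only if it is darker than dark_threshold AND lies inside
// the circle of radius radius_px centered on the image.
GrayImage maskDarkDotsInCenterCircle(const GrayImage& gray,
                                     std::uint8_t dark_threshold,
                                     int radius_px) {
  assert(gray.pixels.size() ==
         static_cast<std::size_t>(gray.width) * gray.height);

  GrayImage mask{gray.width, gray.height,
                 std::vector<std::uint8_t>(gray.pixels.size(), 0)};
  const double cx = (gray.width - 1) / 2.0;
  const double cy = (gray.height - 1) / 2.0;
  const double r2 = static_cast<double>(radius_px) * radius_px;

  for (int y = 0; y < gray.height; ++y) {
    for (int x = 0; x < gray.width; ++x) {
      const double dx = x - cx;
      const double dy = y - cy;
      const bool inside_circle = dx * dx + dy * dy <= r2;
      const bool is_dark = gray.at(x, y) < dark_threshold;
      if (inside_circle && is_dark) {
        mask.pixels[static_cast<std::size_t>(y) * gray.width + x] = 255;
      }
    }
  }
  return mask;
}
```

Combining the intensity test and the circle test in one pass means dark regions outside the fingertip area, such as fixture edges, never reach the later contour step.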

Next, with the individual dots of the fiducial pattern clearly distinguished from the rest of the image, their contours were found using OpenCV's contour detection. Possible contours were kept within a certain reasonable range for the fiducial pattern to avoid any undesirable detections. Once detected, the centroids of these contours were found using OpenCV's included centroid identifier. These centroids are the key component of the image that was desired as their values (both globally and relative to one another) represent the movement of the surface of the fingertip.
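The filtering and centroid steps can be illustrated with a small standalone sketch. It assumes that the "reasonable range" refers to blob area, which the text does not state explicitly, and it represents each detected dot as a plain list of pixels rather than an OpenCV contour. The centroid is computed from raw image moments, which is also the usual approach with OpenCV's `cv::moments`. All type and function names here are hypothetical.

```cpp
#include <cassert>
#include <vector>

struct Pixel { int x; int y; };
struct Centroid { double x; double y; };

// Returns one centroid per blob whose area lies within [min_area, max_area].
// The centroid comes from the raw moments of the blob:
//   m00 = pixel count (area), m10 = sum of x, m01 = sum of y,
//   centroid = (m10 / m00, m01 / m00).
std::vector<Centroid> centroidsOfValidBlobs(
    const std::vector<std::vector<Pixel>>& blobs,
    double min_area, double max_area) {
  assert(min_area > 0.0 && min_area <= max_area);

  std::vector<Centroid> centroids;
  for (const std::vector<Pixel>& blob : blobs) {
    const double m00 = static_cast<double>(blob.size());
    if (m00 < min_area || m00 > max_area) {
      continue;  // too small (noise) or too large (not a fiducial dot)
    }
    double m10 = 0.0;
    double m01 = 0.0;
    for (const Pixel& p : blob) {
      m10 += p.x;
      m01 += p.y;
    }
    centroids.push_back(Centroid{m10 / m00, m01 / m00});
  }
  return centroids;
}
```

Rejecting blobs by area before computing centroids keeps single-pixel noise and large shadows out of the list that is later tracked.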

After the centroids of the fiducial pattern were identified, it was important to track their locations as they moved with the finger. Without tracking, the order of identified contours may change with each frame of the video, which would result in a list of coordinates with no stable association to any particular fiducial dot. Some of the key information of interest in this project is the motion of these fiducial dots relative to one another (indicating deformation).
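To show why a stable dot ordering matters, here is one possible way to turn relative dot motion into a single number. This metric is an assumption made for illustration and is not taken from the project: it averages the change in distance between every pair of dots relative to a reference frame, so a pure translation of the whole pattern scores zero while stretching or compression scores above zero. The `Dot` type and the function name are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Dot { double x; double y; };

// Mean absolute change in pairwise dot spacing between a reference frame and
// the current frame. Index i must refer to the same physical dot in both
// vectors, which is exactly what the tracking step has to guarantee.
double meanSpacingChange(const std::vector<Dot>& reference,
                         const std::vector<Dot>& current) {
  assert(reference.size() == current.size());

  double total = 0.0;
  std::size_t pairs = 0;
  for (std::size_t i = 0; i < reference.size(); ++i) {
    for (std::size_t j = i + 1; j < reference.size(); ++j) {
      const double d_ref = std::hypot(reference[j].x - reference[i].x,
                                      reference[j].y - reference[i].y);
      const double d_cur = std::hypot(current[j].x - current[i].x,
                                      current[j].y - current[i].y);
      total += std::fabs(d_cur - d_ref);
      ++pairs;
    }
  }
  return pairs == 0 ? 0.0 : total / static_cast<double>(pairs);
}
```

If the dot order were shuffled between frames, the pairs being compared would no longer be the same physical dots and the result would be meaningless.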

Initially, an attempt was made to use a legacy OpenCV class called MultiTracker, which takes an initial set of areas of interest in an image in the form of bounding boxes, then tracks those areas of interest as the frames change over time. After struggling to get the desired results with this legacy class, and given its lack of adequate documentation, I decided to create a custom tracker from scratch. This custom tracker takes in the initially identified centroid locations, then with every new frame, compares the newly detected centroids with the previous centroid locations. It then assumes that if the distance between a new centroid and a previous centroid is very small, they must be the same dot. This algorithm worked extremely well for the paper fiducial pattern used to test the method, as can be seen in the GIF below.
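The frame-to-frame matching idea described above can be sketched as a nearest-neighbor update. This is a minimal reconstruction from the description, not the project's actual tracker: the `Point2` type, the function name, and the `max_jump_px` parameter are hypothetical, and keeping the old position when no detection is close enough is an assumption, since the original fallback behavior is not described.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Point2 { double x; double y; };

// Updates tracked in place so that index i keeps referring to the same dot.
// For each tracked dot, the closest new detection becomes its new position
// if it lies within max_jump_px; otherwise the previous position is kept
// (assumed fallback, not confirmed by the source).
void updateTracks(std::vector<Point2>& tracked,
                  const std::vector<Point2>& detections,
                  double max_jump_px) {
  assert(max_jump_px > 0.0);

  for (Point2& t : tracked) {
    double best_dist = max_jump_px;
    const Point2* match = nullptr;
    for (const Point2& d : detections) {
      const double dist = std::hypot(d.x - t.x, d.y - t.y);
      if (dist < best_dist) {
        best_dist = dist;
        match = &d;
      }
    }
    if (match != nullptr) {
      t = *match;
    }
  }
}
```

Calling `updateTracks` once per frame keeps the dot order stable even when the detector returns centroids in a different order. Note that this sketch does not stop two tracks from claiming the same detection, so dots that pass close to each other could still collapse onto one centroid.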

Though this tracking method works very well with the idealized paper fiducial pattern, there were some issues when integrating with the real prototype fingertips. Due to the lower contrast and imperfect fiducial dots, the algorithm would sometimes briefly lose track of a dot. Once a centroid was lost in this way, the tracking algorithm would forget about the dot, replace it with a duplicate of another centroid, and never regain it, even if the same centroid was later re-identified. This issue can be seen when comparing the two examples below. The first showcases the ideal tracking of the paper fiducial pattern, while the second shows the faulty tracking of the real prototype finger.

Results

This package was ultimately applied on a real Franka Emika Panda manipulator arm actuating the sliding platform from the test fixture. Though the fiducial tracking on the prototype finger needs some adjustment to better track in non-ideal lighting conditions, the fundamental abilities of the system were shown to be functional and working as intended.

With the package running, the user can use the various services provided by the motion_control node to control the robot and perform various motion profiles with the testing rig platform. While the robot is running, the vision node is identifying and tracking the fiducial pattern dots on the tip of the prototype finger and displaying these tracked locations in the terminal. This system may serve as the first step in further investigations into the relationship between manipulation and the deformation characteristics of a prototype fingertip, and ultimately may assist in the creation of newer biomimetic fingers that provide desirable material properties.